Nano Banana: How Google’s Gemini 2.5 Rewired Image-to-Image AI for E-commerce and Beyond

What happens when an anonymous model with a quirky codename crashes the leaderboards of an AI benchmarking arena? You get Nano Banana, Google’s Gemini 2.5 Flash Image model. Initially appearing as a mystery contender, Nano Banana quickly rose to prominence thanks to its standout ability to preserve character identity, perform multi-turn edits, and deliver results within seconds. For the e-commerce and marketing worlds, this isn’t just a cool trick. It’s a structural shift in how visual content is created, tested, and deployed.

This article unpacks Nano Banana’s viral rise, its technical breakthroughs, where it outshines rivals like DALL-E 3, Midjourney, Adobe Firefly, and Flux, and how it’s reshaping workflows in e-commerce, marketing, and the creator economy. We’ll also explore its limitations, Google’s roadmap for on-device integration, and what it means for product-driven advertisers in the age of generative AI.

Origins & Viral Launch

Nano Banana’s debut was anything but traditional. In early August 2025, the model appeared anonymously on LMArena, an AI battle platform where models compete head-to-head. With no branding or marketing push, Nano Banana had to prove itself purely on merit. And it did.

Users noticed its unusual strengths: character consistency across edits, contextual awareness, and faster turnaround times. As outputs spread across X (Twitter) and Threads, the codename stuck. Google employees cheekily dropped banana emojis, fueling speculation. When the official reveal came—Nano Banana was indeed Google’s Gemini 2.5 Flash Image—the hype had already done its job.

Live demos from creators on X showed how quickly Nano Banana gained traction. User tests of complex edits completed in seconds fueled buzz across AI and design communities.

Core Features and Breakthroughs

1. Character & Identity Consistency

Previous image models notoriously struggled with identity preservation. A single edit could distort a face or change eye color. Nano Banana largely addresses this challenge, keeping a subject consistent across multiple transformations, whether swapping hairstyles, recoloring outfits, or shifting lighting.

2. Conversational, Multi-Turn Editing

Nano Banana allows sequential edits without resetting the image. For instance, users can add a bookshelf to a room, then place a sofa in the most logical spot—all while the model remembers context.
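To make the idea of "editing without resetting" concrete, here is a minimal sketch of how a client application might track a multi-turn session. It assumes a hypothetical `edit_fn` that wraps the actual model call; `EditSession` and `fake_edit` are illustrative names, not part of any Google API. The key point is that each turn starts from the latest image and carries the earlier instructions as context.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical model-call signature: (current image, prior instructions, new instruction) -> edited image.
EditFn = Callable[[bytes, List[str], str], bytes]


@dataclass
class EditSession:
    """Keeps the working image and the instruction history across turns."""
    image: bytes
    edit_fn: EditFn
    history: List[str] = field(default_factory=list)

    def apply(self, instruction: str) -> bytes:
        # Edit the latest image (not the original) and pass earlier turns as context,
        # so references like "the sofa" or "the bookshelf" can be resolved.
        self.image = self.edit_fn(self.image, list(self.history), instruction)
        self.history.append(instruction)
        return self.image


def fake_edit(image: bytes, history: List[str], instruction: str) -> bytes:
    # Stand-in for a real model call: appends each instruction to the "image" bytes.
    return image + b"|" + instruction.encode()


session = EditSession(image=b"room", edit_fn=fake_edit)
session.apply("add a bookshelf")
session.apply("place a sofa in the most logical spot")
print(session.image)  # b'room|add a bookshelf|place a sofa in the most logical spot'
```

Injecting the model call as a function keeps the session logic testable offline and lets you swap in whichever SDK or endpoint you actually use.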

3. Natural Language as Interface

Forget layers and masking. Simply type: “make the shirt navy blue under soft morning light.” The model applies edits contextually, democratizing advanced editing for marketers, designers, and small businesses.

4. Speed & Efficiency

Latency matters. Creative workflows demand instant feedback. Nano Banana typically processes edits in 3–10 seconds, compared to 30–60 seconds for many competitors.
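Using only the latency ranges quoted above, a back-of-the-envelope conversion to edits per hour shows why the gap matters for iteration. This assumes purely sequential edits and ignores human review time, so treat the figures as upper bounds rather than measured throughput.

```python
def edits_per_hour(seconds_per_edit: float) -> float:
    """Upper bound on sequential edits per hour at a given latency (excludes review time)."""
    return 3600 / seconds_per_edit


# Latency ranges (fastest, slowest) in seconds, as quoted in the text.
ranges = {"Nano Banana": (3, 10), "typical competitor": (30, 60)}
for label, (fast, slow) in ranges.items():
    print(f"{label}: {edits_per_hour(slow):.0f}-{edits_per_hour(fast):.0f} edits/hour")
# Nano Banana: 360-1200 edits/hour
# typical competitor: 60-120 edits/hour
```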

5. Breadth of Operations

From inpainting and outpainting to background swaps, style transfers, and object insertions, Nano Banana covers most editing needs within one interface.

Competitor Comparisons

Nano Banana vs. DALL-E 3 (OpenAI)

- Strengths: Superior realism, faster turnaround (<10s vs ~60s), consistent identity preservation.

- Weaknesses: Can trail DALL-E 3 at from-scratch creative generation, where DALL-E’s artistry shines.

Nano Banana vs. Midjourney

- Strengths: Photorealism and contextual edits.

- Weaknesses: Midjourney dominates stylization and artistic flair.

Nano Banana vs. Adobe Firefly

- Strengths: Simplicity—natural language replaces layers.

- Weaknesses: Lacks Adobe’s fine-grain professional controls; struggles with text rendering.

Nano Banana vs. Flux

- Strengths: Speed (3–10s vs 60+ seconds).

- Weaknesses: Flux edges ahead in hyper-real photorealism, but at a heavy compute cost.

Industry Impact

E-commerce: Product Photography Reinvented

Brands spend millions staging photoshoots for every SKU variant. Nano Banana collapses that workflow: one base image, near-endless iterations. One retailer reportedly saved $2.3M annually by replacing photoshoots with AI-generated outputs, and retailers using virtual try-ons have reported conversion-rate increases of 34%, reshaping the online shopping UX.

Here’s where Dataïads enters the picture. While Nano Banana refines visual editing, platforms like Dataïads optimize product data and feeds. Together, they create a flywheel: clean, enriched product attributes fueling AI visuals that convert faster. For retailers, it’s no longer about one-off campaigns, but activating product intelligence across visuals, feeds, and landing pages.
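As an illustration of the "one base image, many variants" flywheel, the sketch below turns product-feed rows into one edit prompt per SKU, all pointing at the same approved base photo. The feed fields (`sku`, `color`, `material`), the file name, and the prompt wording are hypothetical; this is not Dataïads’ or Google’s implementation, just one way structured attributes could drive image edits.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class VariantEdit:
    sku: str
    base_image: str  # path or ID of the single approved base photo
    prompt: str


def build_variant_edits(base_image: str, variants: List[Dict[str, str]]) -> List[VariantEdit]:
    """Turn feed rows (hypothetical fields: sku, color, material) into edit prompts."""
    edits = []
    for row in variants:
        # Change only the attribute that differs; pin everything else for consistency.
        prompt = (
            f"Recolor the product to {row['color']} {row['material']}, "
            "keep the model, pose, framing, and lighting unchanged."
        )
        edits.append(VariantEdit(sku=row["sku"], base_image=base_image, prompt=prompt))
    return edits


feed = [
    {"sku": "TSHIRT-NAVY-M", "color": "navy blue", "material": "cotton"},
    {"sku": "TSHIRT-OLIVE-M", "color": "olive green", "material": "cotton"},
]
for edit in build_variant_edits("tshirt_base.jpg", feed):
    print(edit.sku, "->", edit.prompt)
```

The cleaner the feed attributes, the more precise each prompt becomes, which is the link between product data quality and visual output.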

Marketing & Media: Campaigns at Warp Speed

What took days of coordination between photographers, designers, and retouchers can now be executed in hours. From polished headshots to customized ad creatives, Nano Banana accelerates campaign cycles and scales personalization.

A practical workflow for beauty and fashion brands has already surfaced on X:

- Send product image

- Nano Banana creates hyper-realistic models showcasing your product

- Thumbs up/down approval system for quality control

- Kling AI transforms approved images into professional video ads

- Final videos delivered in seconds
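The steps above can be sketched as a small orchestration function with a human approval gate between image generation and video generation. Each stage is passed in as a function; the generation and video stages here are hypothetical stand-ins, not real Nano Banana or Kling AI client calls, so the flow can be run and tested without external services.

```python
from typing import Callable, List


def run_ad_pipeline(
    product_image: str,
    generate_model_shots: Callable[[str], List[str]],  # hypothetical image-generation step
    approve: Callable[[str], bool],                     # thumbs up/down review
    make_video: Callable[[str], str],                   # hypothetical image-to-video step
) -> List[str]:
    """Generate candidate shots, keep the approved ones, and turn each into a video."""
    candidates = generate_model_shots(product_image)
    approved = [shot for shot in candidates if approve(shot)]
    # Only approved shots reach the (typically slower, costlier) video stage.
    return [make_video(shot) for shot in approved]


# Stub stages so the flow runs without any external service.
videos = run_ad_pipeline(
    "lipstick.png",
    generate_model_shots=lambda img: [f"{img}:shot{i}" for i in range(3)],
    approve=lambda shot: not shot.endswith("shot1"),  # reviewer rejects shot1
    make_video=lambda shot: f"video({shot})",
)
print(videos)  # ['video(lipstick.png:shot0)', 'video(lipstick.png:shot2)']
```

Placing the approval gate before the video step is the design choice that protects quality and budget: rejected stills never consume video-generation time.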

This illustrates how Nano Banana slots into multi-AI pipelines for beauty e-commerce social ads, where speed and aesthetic fidelity directly influence ROAS (return on ad spend).

Creator Economy: Power to Individuals

Influencers and small brands gain access to pro-grade editing without Adobe expertise. From consistent character-driven comics to retro family photo restorations, the playing field is leveling.

Technical Limitations and Challenges

Nano Banana isn’t flawless:

- Multi-edit degradation: Repeated edits can blur details.

- Inconsistent processing: ~50% of broad prompts may return the image unchanged.

- Diversity gaps: Struggles to represent diverse ethnicities accurately.

- Physics comprehension: Weight, volume, and spatial logic occasionally fail.

- Resolution limits: Outputs capped at 1024×1024 px.

- Mandatory watermarking: Every image carries Google’s invisible SynthID watermark.

Future Roadmap & Strategic Implications

On-Device “Nano” Models

Google’s codename wasn’t just playful. “Nano” signals ambitions for compact, on-device AI. Imagine Pixel phones with built-in, real-time editing—privacy-safe, offline, and instant.

Beyond Images: Toward Video

With consistency across edits already largely handled for still images, video is the next logical step, where the same subject must stay consistent from frame to frame. Integration with Google’s Veo 3 could extend contextual edits into motion.

Hardware Ecosystem Moat

If Nano Banana becomes a Pixel-exclusive feature, it could tilt the smartphone market. On-device AI could create stickiness that competitors like Apple may struggle to match.

From Tools to Workflows

The broader shift isn’t about AI as “generators” but as creative partners. With APIs available through Google AI Studio, developers are embedding Nano Banana into workflows spanning e-commerce, media, and even AR shopping.
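For developers, a minimal sketch of what a single edit request to the Gemini API might look like over REST is shown below. It builds the request with the standard library but does not send it. The endpoint path, the `x-goog-api-key` header, and the model ID `gemini-2.5-flash-image` reflect Google’s public Gemini API docs as we understand them and should be treated as assumptions to verify against the current model list; the image bytes and key are placeholders.

```python
import base64
import json
import urllib.request

# Assumptions: verify the endpoint and model ID against Google's current Gemini API docs.
API_BASE = "https://generativelanguage.googleapis.com/v1beta/models"
MODEL_ID = "gemini-2.5-flash-image"


def build_edit_request(image_bytes: bytes, mime_type: str, instruction: str,
                       api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for one image edit."""
    body = {
        "contents": [{
            "parts": [
                {"text": instruction},  # the natural-language edit instruction
                {"inline_data": {       # the source image, base64-encoded
                    "mime_type": mime_type,
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
            ]
        }]
    }
    return urllib.request.Request(
        f"{API_BASE}/{MODEL_ID}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


req = build_edit_request(b"\x89PNG placeholder", "image/png",
                         "make the shirt navy blue under soft morning light", "YOUR_KEY")
print(req.full_url)
# Sending it would be urllib.request.urlopen(req); edited images are returned
# as base64-encoded inline data in the response parts.
```

Google also publishes official SDKs that wrap this request; the raw form is shown only to make the structure (instruction text plus inline image in one message) visible.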

Conclusion: Key Takeaways & Outlook

Nano Banana isn’t just hype. It’s a decisive top performer—a paradigm shift in AI image editing. For marketers and e-commerce players, its promise lies in speed, consistency, and lowering barriers to high-quality visuals.

- It democratizes pro-grade editing, moving value from technical skills to creative strategy.

- It redefines workflows in product photography, campaign creation, and influencer branding.

- It positions Google for a future where AI is native to devices, not just the cloud.

Nano Banana is already available in GenIA, Dataïads’ platform for e-commerce optimization. Take advantage of Nano Banana (Gemini 2.5) and other multimodal models on the market to boost your productivity and creativity and to give a new dimension to the production of your visuals, assets, and e-commerce content. Request a demo now.

Continue reading

Top 10 Global Marketplaces 2025 — A complete overview of global e-commerce

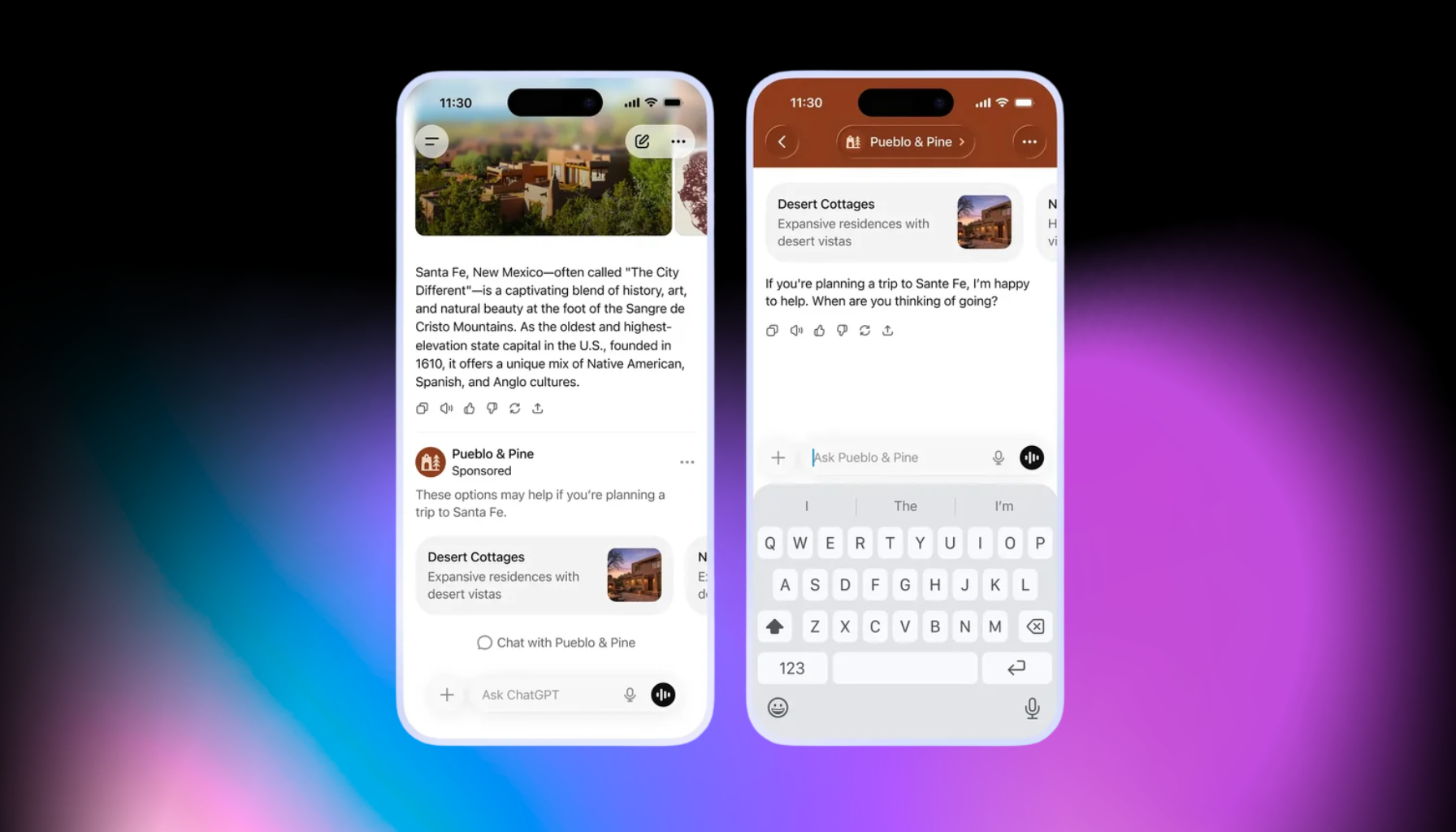

ChatGPT Ads: How Conversational Advertising Is Changing the Rules of Digital Marketing

Google UCP & OpenAI ACP: 2026 expert checklist to be ready for agentic commerce

.svg)