AI-generated content in e-commerce: legal obligations and business impacts (2026 edition)

Generative AI has established itself in e-commerce at breakneck speed.

Automated product descriptions, advertising visuals generated from the feed, catalog videos, dynamic landing pages, personalized recommendations... AI is everywhere in the acquisition funnel.

But while marketing teams are accelerating, regulation is rapidly catching up with these uses.

The AI Act in Europe, national laws, and American, Chinese, and British regulations: AI content generation is no longer an “innovation” topic; it is now a matter of compliance, liability, and governance.

For online retailers and international advertisers, the question is no longer whether to use AI, but how to use it without putting campaigns, brand, and revenue at risk.

In this article, we take stock of:

- the key legal obligations related to AI-generated content,

- what they mean in concrete terms for e-commerce and paid media,

- and how to structure an efficient, compliant AI approach without hampering innovation.

Why the regulation of AI content directly concerns e-commerce

Generative AI goes to the heart of modern e-commerce: product content.

Titles, descriptions, attributes, images, videos, reviews, landing pages, ad creatives... Anything that influences the purchase decision can now be generated or modified by AI.

Yet this content has three sensitive characteristics:

- They directly influence the consumer

- They are disseminated on a large scale

- They can mislead if they are not controlled

This is precisely why regulators have decided to act.

Under the European framework, AI-generated synthetic content used in a commercial context must now comply with principles of transparency, traceability, and accountability.

The European AI Act: the new global standard

An extraterritorial framework

The AI Act (Regulation (EU) 2024/1689) applies to any company whose AI systems or AI-generated content are placed on the market or used in the European Union, even if the technology is developed outside the EU.

In other words:

- An American e-merchant

- An Asian marketplace

- An international brand

👉 all are in scope as soon as their content reaches European consumers.

What Article 50 imposes in practice

From 2 August 2026, Article 50 of the AI Act requires that:

- AI-generated content is identifiable as such,

- via a machine-readable marking (metadata, watermarking),

- and, in some cases, via information visible to the user.

This concerns:

- automatically generated texts,

- advertising images and visuals,

- videos and audio content,

- deepfakes or modified content.

Exceptions exist (artistic content, satire), but they are narrowly defined and rarely apply to e-commerce.
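To make the difference between machine-readable marking and visible disclosure concrete, here is a minimal Python sketch, assuming a team stores a JSON provenance record with each asset. The `ProvenanceMarker` class, its fields, and the `VISIBLE_LABEL_TYPES` policy are hypothetical illustrations, not a format prescribed by the AI Act; in practice, teams would more likely rely on an established provenance standard such as C2PA or on embedded watermarks.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical policy: content types that also get a visible notice.
# This set is an illustrative assumption, not a legal list.
VISIBLE_LABEL_TYPES = {"image", "video", "audio", "deepfake", "ad_creative"}


@dataclass
class ProvenanceMarker:
    """Hypothetical machine-readable marker stored alongside an asset."""
    asset_id: str
    content_type: str
    ai_generated: bool
    generator: str  # name of the AI system used (illustrative field)
    created_at: str

    def to_json(self) -> str:
        # Serialize to JSON so the marker can be embedded as metadata
        # or stored as a sidecar file next to the asset.
        return json.dumps(asdict(self), sort_keys=True)


def needs_visible_label(marker: ProvenanceMarker) -> bool:
    """Return True when this sketch's policy also requires a user-facing notice."""
    return marker.ai_generated and marker.content_type in VISIBLE_LABEL_TYPES


marker = ProvenanceMarker(
    asset_id="sku-123-hero",
    content_type="image",
    ai_generated=True,
    generator="example-image-model",
    created_at=datetime.now(timezone.utc).isoformat(),
)
print(marker.to_json())
print(needs_visible_label(marker))  # True: images get a visible notice in this policy
```

Keeping the visible-label policy in one explicit set makes it easy to tighten per market, for example extending it to every content type where dual labeling is required.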

France, United States, China: different approaches, the same logic

In France: reinforced transparency

France goes further for certain uses, in particular:

- images generated or retouched by AI,

- commercial influence,

- editorial responsibility.

The objective is clear: avoid any confusion between human content and synthetic content.

In the United States: the FTC on the front line

There is no single federal law, but the FTC has already banned:

- fake reviews generated by AI,

- fictitious testimonials,

- endorsements falsely presented as human.

Penalties can exceed $50,000 per violation.

In China: the strictest line

China mandates dual labeling for all AI-generated content:

- visible to the user,

- invisible via metadata.

There is no exception for artistic content.

The logic is simple: maximum control of information.

Copyright: Who does AI-generated content belong to?

This is one of the points marketing teams most often misunderstand.

Fundamental principle

In European (and French) law, only a human can be an author.

Content automatically generated by an AI is not protected by default.

How to secure content ownership

However, a company can claim rights if it is able to prove:

- significant human intervention,

- editorial and creative choices,

- validation and assumed responsibility.

Concretely, this involves:

- keeping the prompts,

- documenting the generation rules,

- tracing human validations,

- archiving successive versions.

👉 Without this traceability, AI content can become legally fragile.
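As a minimal sketch of this traceability, assuming content versions are kept in an append-only history, the Python example below records the prompt, the generation rule, the human validator, and a SHA-256 digest of each version. `ContentHistory`, `rule_id`, and the other names are hypothetical; whether such a log is sufficient evidence of human authorship is a legal question this code does not settle.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ContentVersion:
    text: str
    prompt: str
    rule_id: str                  # ID of the documented generation rule (hypothetical)
    validated_by: Optional[str]   # reviewer who approved this version, if any
    timestamp: str
    digest: str                   # SHA-256 of the text, to detect later tampering


@dataclass
class ContentHistory:
    """Append-only history of one piece of content (illustrative sketch)."""
    content_id: str
    versions: list = field(default_factory=list)

    def add_version(self, text: str, prompt: str, rule_id: str,
                    validated_by: Optional[str] = None) -> ContentVersion:
        version = ContentVersion(
            text=text,
            prompt=prompt,
            rule_id=rule_id,
            validated_by=validated_by,
            timestamp=datetime.now(timezone.utc).isoformat(),
            digest=hashlib.sha256(text.encode("utf-8")).hexdigest(),
        )
        self.versions.append(version)  # earlier versions are never overwritten
        return version

    def has_human_validation(self) -> bool:
        # True if at least one archived version was approved by a named person.
        return any(v.validated_by for v in self.versions)


history = ContentHistory("sku-123-description")
history.add_version("Cotton T-shirt.", prompt="Describe SKU 123", rule_id="desc-v1")
history.add_version("Soft cotton T-shirt.", prompt="Describe SKU 123",
                    rule_id="desc-v2", validated_by="editor@example.com")
print(len(history.versions), history.has_human_validation())  # 2 True
```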

Why the product feed is becoming a legal issue, not just a marketing one

For a long time, the product feed was considered mere technical plumbing for Google Shopping or Meta. Today, it is becoming a major source of AI-generated content.

A feed now drives descriptions, images, videos, post-click pages, and ad creatives. Any error, approximation, or inconsistency in its data can be amplified by AI.

This is where regulatory issues directly meet business challenges.

An incomplete or poorly structured feed can lead to inaccurate product claims, misleading visuals, or incorrect product categorization. Over time, this can expose the brand to sanctions while also eroding trust and performance.
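One way to limit this amplification, sketched here under the assumption that feed records are plain Python dictionaries, is to hold back incomplete records before they reach any generation step. The required fields and the positive-price check are illustrative assumptions, not any channel's official feed specification.

```python
# Hypothetical minimum attributes required before any AI generation.
REQUIRED_FIELDS = ("id", "title", "price", "availability", "brand")


def validate_product(product: dict) -> list:
    """Return a list of problems; an empty list means the record may be used."""
    problems = []
    for name in REQUIRED_FIELDS:
        value = product.get(name)
        if value is None or (isinstance(value, str) and not value.strip()):
            problems.append(f"missing {name}")
    price = product.get("price")
    if isinstance(price, (int, float)) and price <= 0:
        problems.append("price must be positive")
    return problems


def generation_ready(feed: list) -> tuple:
    """Split a feed into records safe to enrich and records to fix first."""
    ready, rejected = [], {}
    for product in feed:
        problems = validate_product(product)
        if problems:
            rejected[str(product.get("id", "<no id>"))] = problems
        else:
            ready.append(product)
    return ready, rejected


feed = [
    {"id": "A1", "title": "Linen shirt", "price": 49.0, "availability": "in_stock", "brand": "Acme"},
    {"id": "A2", "title": "", "price": 0, "availability": "in_stock", "brand": "Acme"},
]
ready, rejected = generation_ready(feed)
print([p["id"] for p in ready])  # ['A1']
print(rejected)  # {'A2': ['missing title', 'price must be positive']}
```

Rejected records are returned with their reasons rather than silently dropped, so the data team can fix the source instead of letting AI fill the gaps.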

AI, performance, and compliance are not incompatible

Contrary to popular belief, compliance is not a barrier to performance.

It even becomes a lever for scalability.

Well-governed AI makes it possible to:

- automate without losing control,

- test more quickly,

- deploy internationally without multiplying risks,

- secure media investments.

In fact, this is the approach adopted by the most mature e-commerce platforms.

How to structure effective AI governance in e-commerce

1. Identify content that is actually generated by AI

It all starts with a clear map:

- texts produced,

- pictures,

- videos,

- post-click pages,

- advertising creations.

2. Classify uses by risk level

An advertising visual does not have the same impact as an informative text or a customer review.

Each content type needs to be assessed for how likely it is to mislead the consumer.
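A risk classification can be as simple as an explicit mapping from content type to the control applied before publication. The tiers, content type names, and review levels below are hypothetical and would need to be defined with legal counsel; the sketch only illustrates two design choices: unknown content types default to the strictest review, and AI-written customer reviews are blocked outright.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    PROHIBITED = 4


# Illustrative mapping only: tiers and assignments are assumptions.
RISK_BY_CONTENT_TYPE = {
    "product_attribute": Risk.LOW,
    "product_description": Risk.MEDIUM,
    "landing_page": Risk.MEDIUM,
    "ad_visual": Risk.HIGH,
    "virtual_model": Risk.HIGH,
    "ai_written_review": Risk.PROHIBITED,  # fake reviews are not allowed
}


def review_policy(content_type: str) -> str:
    """Map a content type to the control applied before publication."""
    # Unknown content types default to HIGH so nothing new ships unchecked.
    risk = RISK_BY_CONTENT_TYPE.get(content_type, Risk.HIGH)
    if risk == Risk.PROHIBITED:
        return "not allowed"
    if risk == Risk.LOW:
        return "automated checks"
    if risk == Risk.MEDIUM:
        return "sampled human review"
    return "mandatory human review"


for kind in ("product_attribute", "ad_visual", "ai_written_review", "new_format"):
    print(kind, "->", review_policy(kind))
```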

3. Implement native traceability

This is where technology plays a key role.

Solutions connected to the product feed make it possible to:

- centralize data,

- keep a history of transformations,

- document enrichment rules,

- prove human intervention.
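To illustrate what documented enrichment rules and a history of transformations can look like, the following sketch assumes a hypothetical rule model: each rule carries an ID and a human-readable description, is applied to a copy of the product record, and every field change is logged with its rule ID and timestamp. `EnrichmentRule` and `enrich` are illustrative names, not part of any specific platform's API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class EnrichmentRule:
    rule_id: str
    description: str   # human-readable documentation of the rule
    field: str         # product attribute the rule writes
    apply: Callable[[dict], str]


def enrich(product: dict, rules: list, log: list) -> dict:
    """Apply rules to a copy of the product and record every change in the log."""
    result = dict(product)  # never mutate the source feed record
    for rule in rules:
        old = result.get(rule.field)
        new = rule.apply(result)
        if new != old:
            log.append({
                "product_id": result.get("id"),
                "rule_id": rule.rule_id,
                "field": rule.field,
                "before": old,
                "after": new,
                "at": datetime.now(timezone.utc).isoformat(),
            })
            result[rule.field] = new
    return result


rules = [
    EnrichmentRule("title-brand", "Prefix title with brand", "title",
                   lambda p: f"{p['brand']} {p['title']}"),
]
log = []
enriched = enrich({"id": "A1", "title": "Linen shirt", "brand": "Acme"}, rules, log)
print(enriched["title"])  # Acme Linen shirt
print(log[0]["before"], "->", log[0]["after"])  # Linen shirt -> Acme Linen shirt
```

Because the original record is left untouched and each change is logged against a named rule, the log can later show which transformation produced which published value.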

The key role of product-centric platforms

“Black box” AI tools are rapidly becoming problematic in this regulatory context.

Conversely, a product-centric approach built on product data provides:

- fine-grained control over generated content,

- consistency between pre-click and post-click,

- greater legal clarity.

This is exactly the logic behind:

- the enrichment of structured feeds,

- landing pages generated from qualified data,

- advertising creations linked to the reality of the catalog.
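Consistency between pre-click and post-click can be checked mechanically. The sketch below assumes the catalog entry, the ad, and the landing page are each available as a dictionary of attributes, and flags any field where a surface diverges from the catalog. The field list and function name are illustrative assumptions.

```python
# Fields compared in this sketch (assumed; extend per catalog).
CHECKED_FIELDS = ("price", "availability", "title")


def consistency_issues(catalog: dict, ad: dict, landing_page: dict) -> list:
    """List every field where the ad or landing page diverges from the catalog."""
    issues = []
    for name in CHECKED_FIELDS:
        truth = catalog.get(name)
        for surface, content in (("ad", ad), ("landing_page", landing_page)):
            # Only compare fields the surface actually displays.
            if name in content and content[name] != truth:
                issues.append(f"{surface}.{name}={content[name]!r} but catalog has {truth!r}")
    return issues


catalog = {"id": "A1", "title": "Linen shirt", "price": 49.0, "availability": "in_stock"}
ad = {"title": "Linen shirt", "price": 39.0}
page = {"title": "Linen shirt", "price": 49.0, "availability": "in_stock"}
print(consistency_issues(catalog, ad, page))
# ['ad.price=39.0 but catalog has 49.0']
```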

Towards a more responsible and efficient use of AI in e-commerce

The regulation of AI is not a passing phase.

It is a lasting new framework that will structure e-commerce for years to come.

The brands that succeed will be those that understand that:

- product data quality is central,

- traceability is a competitive advantage,

- compliance accelerates trust,

- brands must control AI, not be controlled by it.

The regulation of content generated by AI marks a new stage in the maturity of e-commerce. It requires brands to move from a logic of opportunistic experimentation to a structured, governed and sustainable approach.

Successful businesses will not be the ones that use AI the most, but those that know how to use it intelligently, based on reliable product data, in a clear and controlled framework.

This is exactly the ground on which Dataïads supports the most demanding e-retailers.

FAQ — generative AI and e-commerce content

Does all AI-generated content have to be flagged?

Not always visibly, but it must always be traceable.

In the European Union, the AI Act requires that content generated or modified by AI be identifiable as such, at least via machine-readable mechanisms (metadata, watermarking). Information visible to the user becomes mandatory when there is a risk of confusion or deception, in particular for visuals, videos, deepfakes or advertising content.

Is an AI-generated product description covered by the AI Act?

Yes. A product description generated automatically for commercial purposes falls squarely within the scope of the transparency obligations. Even when no visible notice is required, the company must be able to prove that the content comes from an AI system, document how it was generated, and assume editorial responsibility.

Are AI-generated product images riskier than text?

Yes, clearly. Images, virtual models, and advertising visuals carry a higher risk of deceiving the consumer. This is why several jurisdictions, including France and China, already impose or will impose explicit labeling of images generated or modified by AI, especially when they alter the perception of reality.

Can AI-generated models be used for e-commerce?

Yes, but under strict conditions.

Consumers should not be misled about the nature of the model. If the avatar is an artificial creation or a digital replica, transparency becomes essential. Projects governed by contract, with the consent of the people concerned and clear communication, stand on firmer legal ground and are better accepted.

Who does AI-generated content belong to?

By default, to no one in copyright terms, if no significant human intervention can be demonstrated. In Europe, only a human can be recognized as an author. However, a company can claim exploitation rights if it can document creative choices, generation rules, human validations, and editorial responsibility.

Should generation prompts and rules be maintained?

Yes, it is highly recommended.

Retaining prompts, parameters, enrichment rules, and human validations is now one of the best legal safeguards available. In the event of a dispute or audit, this documentation demonstrates the control exercised over the AI.

Are fake AI-generated customer reviews allowed?

No. They are explicitly prohibited.

In the United States, the FTC heavily sanctions fake reviews and testimonials generated by AI. In Europe, these practices fall under the rules on deceptive commercial practices. Sanctions can be financial, but also reputational.

Is the product feed affected by AI regulations?

Indirectly, but critically.

The product feed has become the raw material of generative AI in e-commerce. Inaccurate, incomplete, or poorly structured data can lead to misleading, automatically generated content. Regulators do not sanction the feed itself, but its consequences for the information given to consumers.

Does generative AI complicate GDPR compliance?

Not necessarily, but it raises the bar.

As soon as AI processes personal data (recommendations, personalization, automated decisions), the GDPR continues to apply. This implies a clear legal basis, information for users and, in some cases, a right to object or to obtain human intervention.

Is AI compliance holding back marketing performance?

No. It's a false dilemma.

The most advanced businesses use compliance as a scalability lever. By structuring product data, governance and traceability, they automate more quickly, deploy internationally with fewer risks and secure their media investments.

Is there a need for dedicated AI governance in e-commerce?

Yes, as soon as AI is used on a large scale.

Clear governance makes it possible to define what can be automated, under what conditions, and with what controls. It avoids opportunistic uses that create legal risks, brand inconsistencies, or negative publicity.

What is the biggest risk for online retailers today?

Thinking that AI is just a technical tool.

In reality, generative AI has become a central part of the e-commerce value chain, just like pricing or merchandising. Underestimating it exposes retailers to legal, business, and reputational risks that are hard to recover from.

Continue reading

Top 10 Global Marketplaces 2025 — A complete overview of global e-commerce

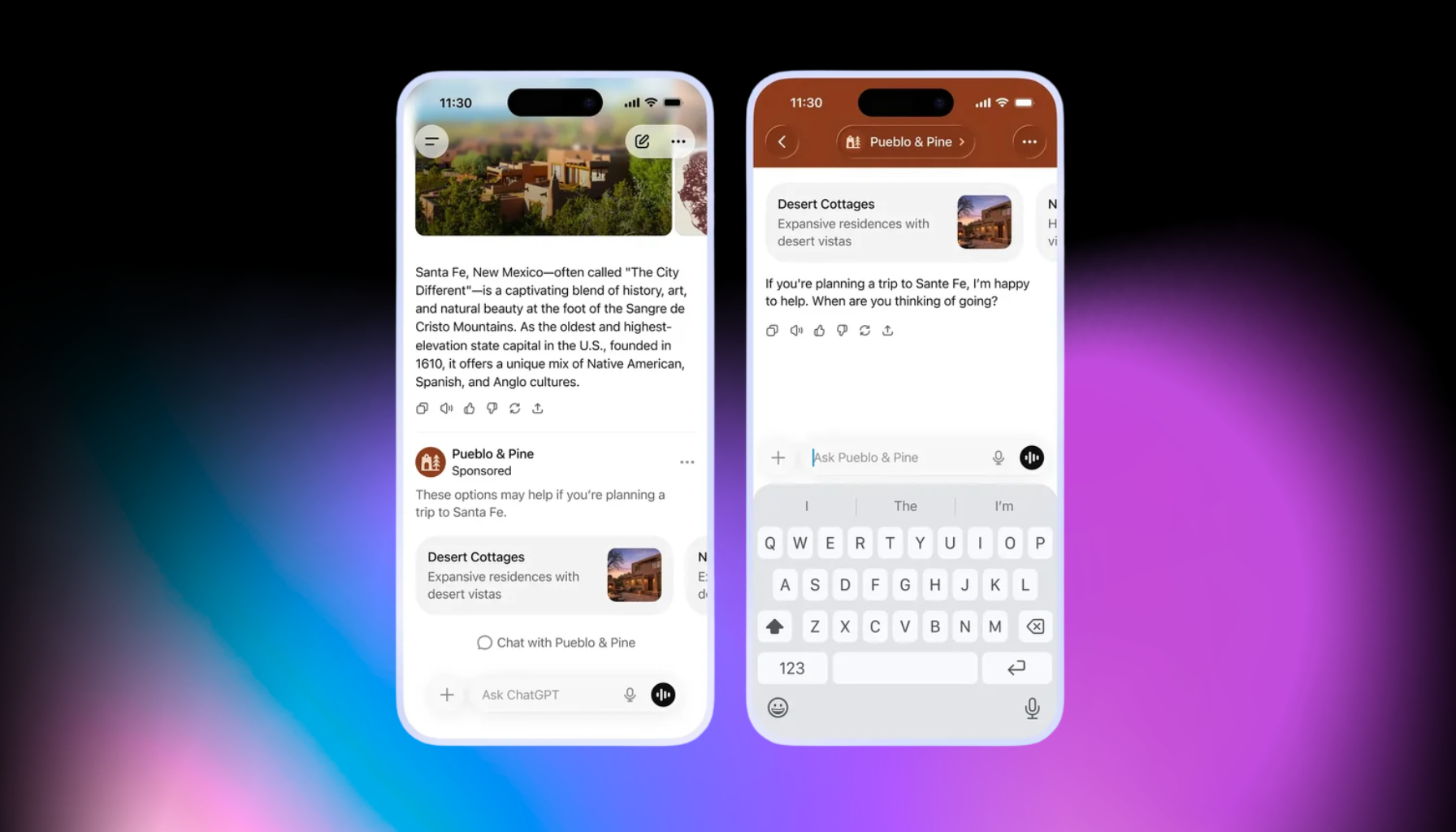

ChatGPT Ads: How Conversational Advertising Is Changing the Rules of Digital Marketing

Google UCP & OpenAI ACP: 2026 expert checklist to be ready for agentic commerce

.svg)