What is e-commerce A/B testing? Complete guide, methods and mistakes to avoid to double your conversions in 2025

A/B testing is a simple experimentation method: you compare two versions of a page, journey, or campaign element to measure which one performs better. Applied to e-commerce, A/B testing helps increase click-through, add-to-cart and conversion rates, without relying on gut feeling.

This is an essential data-driven approach, especially when media budgets are managed by automated campaigns (PMax, Demand Gen, Catalog Ads).

In this article, we cover: the definition of A/B testing, the main types of tests, a step-by-step method, frequent errors (peeking, SRM, poor sizing) and how to connect A/B testing with your product feeds and landing pages.

1. What is A/B testing?

Definition of A/B testing

A/B testing, also called split testing, is a scientific experimentation method used in digital marketing 🧑‍🔬

Imagine comparing two versions of a web page or campaign element to see which one gets better results. Each version is shown to a comparable group of users, and performance is analyzed to determine which one converts more.

The principle rests on collecting statistical data. By using metrics such as click-through rate, add-to-cart rate or conversion rate, businesses can make informed decisions based on real facts rather than assumptions.

A/B testing helps continuously optimize pages and campaigns to maximize performance. In short, A/B testing is a scientific approach to improving the effectiveness of marketing strategies.

An A/B test randomly splits traffic between an “A” version (control) and a “B” version (variation). You track one primary indicator (KPI), e.g. add-to-cart rate, conversion rate or revenue per visit, until the sample is large enough to draw a conclusion. The objective: to decide, based on evidence, which version to deploy.
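To make the random split concrete, here is a minimal sketch of one common assignment technique: hashing a stable visitor ID together with an experiment name, so that a returning visitor always lands in the same variant. The `assign_variant` helper, the experiment name and the visitor IDs are hypothetical; each experimentation platform implements assignment in its own way.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, split_b: float = 0.5) -> str:
    """Deterministically assign a visitor to "A" (control) or "B" (variation).

    Hashing the visitor ID together with the (hypothetical) experiment name
    gives each visitor a stable bucket per experiment, independent of other
    experiments running at the same time.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    value = int.from_bytes(digest[:8], "big")  # roughly uniform in [0, 2**64)
    # Integer comparison avoids float rounding at the edges (split_b = 0 or 1).
    return "B" if value < int(split_b * 2**64) else "A"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "landing-title-test")] += 1
    print(counts)  # close to a 50/50 split
```

Salting the hash with the experiment name keeps assignments independent across concurrent tests. Keep `split_b` fixed for the whole test: changing it mid-flight moves visitors between variants.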

Note: Google Optimize was discontinued in September 2023. Today, teams rely on third-party platforms (client-side or server-side) and/or on the “Experiments” modules of advertising platforms (e.g. Google Ads) to test campaign variants.

For example, in Dataïads, an A/B test in Smart Landing Page consists of creating two landing page variants from the same template:

- Variant A: “generic” title + best-seller merchandising

- Variant B: “use-focused” title + “similar products” merchandising with a “-20% and more” filter

Activate a 50/50 split, set “Add to cart” as the primary KPI and monitor the stability of the results over 14 days.

Importance of A/B testing for e-commerce

For e-commerce sites, A/B testing is essential. It lets them optimize every aspect of their website to improve conversion rates 📈

By testing different versions of product pages, landing pages, or action buttons, e-retailers can identify what best meets the expectations of their visitors.

A/B testing also helps reduce bounce rates and improve the user experience. By understanding what works and what does not, e-retailers can make optimizations based on users' real preferences.

These improvements based on data analysis generally result in sales and revenue growth.

2. Why is A/B testing key for an e-commerce site?

A/B testing is a simple and powerful tool for improving performance without risky bets. It allows you to learn quickly, to decide based on evidence and to invest smarter.

By testing different versions of pages or items, e-commerce or media managers can accurately determine what works best for their specific audience.

- De-risk changes. Instead of redesigning an entire page, you isolate a hypothesis and test it, minimizing the risk of losing traffic or sales.

- Continuous and measurable improvement. By testing variations of product pages, forms, or CTAs, you can identify exactly what drives conversions up (and what doesn't).

- Learn fast, decide fast. A/B testing reveals the ingredients that make the KPI move: title, visuals, arguments, filters, merchandising.

- Agility without IT burden. Modern experimentation tools let you launch tests without tying up the technical roadmap. No more requests stuck in an endless queue. 😅

- Smart personalization. Test by segments (new vs recurring, mobile vs desktop, markets). Then deploy the right version for each audience to increase engagement, satisfaction, and loyalty.

- Invest your budget better. Each conversion-rate point gained improves ROAS, especially with automated campaigns (PMax, Demand Gen). By identifying best practices for your audience, you maximize media impact.

Tip: combine “pre-click” A/B testing (titles, attributes, feed visuals) and “post-click” A/B testing (landing page, merchandising) to create a virtuous circle throughout the journey.

Example: in Feed Enrich, set up two title rules for the same product segment:

- Title A: “Brand + Model”

- Title B: “Brand + Model + Differentiating Attribute (material/use/actual color)”

Deploy the two feed versions on two large product clusters and measure their impact on CTR and CPC in Google. Then replicate the winner across the global feed.
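The two title rules can be expressed as small functions. This is a generic Python sketch assuming a product record with hypothetical `brand`, `model` and `differentiator` fields; it is not Feed Enrich's rule syntax.

```python
def title_a(product: dict) -> str:
    """Rule A: "Brand + Model"."""
    return f"{product['brand']} {product['model']}"


def title_b(product: dict) -> str:
    """Rule B: "Brand + Model + differentiating attribute".

    Falls back to rule A when the (hypothetical) differentiator field is
    missing or empty, so no product ends up with a dangling title.
    """
    attribute = (product.get("differentiator") or "").strip()
    return f"{title_a(product)} {attribute}" if attribute else title_a(product)


shoe = {"brand": "Acme", "model": "Runner 2", "differentiator": "Recycled Mesh Trail Shoe"}
print(title_a(shoe))  # Acme Runner 2
print(title_b(shoe))  # Acme Runner 2 Recycled Mesh Trail Shoe
```

The fallback matters in practice: feeds rarely have the differentiating attribute filled for every product.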

3. The 7 main types of tests (and when to use them)

There are several types of A/B tests that online retailers can use to optimize their web pages and campaigns. Each type has its own advantages and can be adapted to specific business goals.

3.1 Simple A/B testing

Principle. Two versions of the same page or element (A vs B), traffic distributed randomly.

When to use it. Validate a single hypothesis (CTA label, block order, main visual).

To remember. It is the most widely used format: quick to launch, with easy-to-read results.

Dataïads application: Smart Landing Page

A/B test on the position of the “Promise + social proof” block:

- A: under the hero

- B: above the price/CTA block

- Primary KPI: cart addition rate. Secondary KPI: scroll depth.

3.2 Multivariate test

Principle. Several items vary at the same time (Title × Image × CTA) to measure their interactions.

When to use it. You have a lot of traffic and want to understand the combined effect of several components.

To remember. Very informative but traffic-intensive; to be avoided if the volume is limited.
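The traffic cost is easy to see with a quick count: a full-factorial multivariate test needs one cell per combination of levels, and each cell only receives its share of visitors. The factor levels and the 40,000-visitor figure below are illustrative assumptions.

```python
from itertools import product

# Hypothetical levels for a Title x Image x CTA multivariate test.
factors = {
    "title": ["generic", "use-focused"],
    "image": ["packshot", "lifestyle"],
    "cta": ["Discover", "Add to cart"],
}


def full_factorial(factors: dict) -> list[dict]:
    """Every combination of levels: one test cell per combination."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


def visitors_per_cell(total_visitors: int, n_cells: int) -> int:
    """Traffic each cell receives with an even split."""
    return total_visitors // n_cells


cells = full_factorial(factors)
print(len(cells))                              # 8 (2 x 2 x 2)
print(visitors_per_cell(40_000, len(cells)))   # 5000
```

Adding a fourth factor with three levels would triple the number of cells to 24, leaving each with a third of the traffic it had before.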

Dataïads application: Smart Creative for Catalog Ads creatives

Generate 4 creative variants from the same product:

- Hero product vs lifestyle, with/without dynamic promo badge

- CTA “Discover” vs “Add to Cart”

Export to Meta/Google and read the asset-level uplifts (CTR, and post-click CVR via the Dataïads landing page).

3.3 Split URL/redirect test

Principle. We compare two distinct pages (two URLs), often with very different templates (e.g. generic vs thematic landing).

When to use it. Test a radically new page concept, or a major redesign.

To remember. Ideal for “macro” changes in structure and UX.

3.4 Multi-page test (funnel)

Principle. The variation applies to several stages of the journey (listing + PDP + basket).

When to use it. You are looking for a global impact on conversion, beyond an isolated screen.

To remember. Measure the “end-to-end” effect and avoid local optimizations that degrade performance elsewhere.

3.5 A/A testing (calibration)

Principle. Two identical versions to check the tooling and the quality of the randomization.

When to use it. Before a test campaign, after a stack change, or in case of methodological doubts.

To remember. Detects measurement issues (SRM, tagging, targeting) before testing “for real.”

3.6 Real-time A/B testing

Principle. Changes are applied on the fly via an experimentation tool, with live performance monitoring.

When to use it. Fast adjustments (micro-copies, badges, highlights), commercial period, iterative tests.

To remember. Very agile; maintain strict governance (one primary KPI, predefined decision windows).

3.7 Media asset/campaign tests (ad platforms)

Principle. In Google Ads (Experiments) and other ad networks, you test variants of assets, targeting or bidding strategies with a budget split.

When to use it. Evaluate Demand Gen ads, creative combinations, or PMax strategies without losing campaign history.

To remember. Perfect for linking pre-click (creative, targeting) and post-click (landing page), and for objectively measuring the media impact on conversion.

Tip: start with simple A/B tests to secure quick wins, use split URL tests to evaluate templates, then move to multivariate if your volume allows it. Calibrate regularly with an A/A test and speed up day-to-day iterations with real-time testing. In parallel, validate your assets and strategies directly in the ad platforms via Experiments.

Using the Dataïads platform (Feed Enrich + Smart Creative + Smart Asset + Smart Landing Page)

- Feed A vs Feed B (enriched titles)

- Creative A vs B (generated background vs studio background)

- Landing A vs B (relevant similar-products merchandising vs “promos only”)

Read CTR and CPC on the ad platform side, and add-to-cart, conversion and AOV on the Dataïads side.

👉 Also read: Advertising performance and user experience: towards reconciliation?

4. How to perform an effective A/B test (8-step method)

To obtain significant results with A/B testing, you need to follow a rigorous methodology.

We have identified 8 key steps.

- Define one clear objective (primary KPI).

What do you want to improve 🎯? Examples: “+10% add-to-cart rate”, “−10% cart abandonment rate”, “+0.3 pt conversion rate”. A single primary KPI keeps decisions unambiguous.

- Formulate the hypothesis (and the “why”).

Write down what you expect to improve and why:

Ex. “A more descriptive product title (material + use) will increase the add-to-cart rate because it reduces uncertainty.”

Base it on data, customer feedback, or UX insights.

- Select the variable(s) to test.

Choose the elements that support the hypothesis: title, image, CTA, block order, layout...

For a simple A/B test, change a single lever; in a multivariate test, keep the number of combinations compatible with your traffic.

- Create clean variants.

Design A (control) and B (variation), changing only the targeted variable. The difference must be strong enough to influence behavior.

- Size the sample (before launching).

Calculate the sample size from your current conversion rate, the minimum detectable effect (MDE), the significance level and the statistical power. Also set a minimum duration that covers full cycles (weekdays vs weekends, promotional operations).

- Split traffic and randomize cleanly.

Split the traffic (often 50/50) and monitor SRM (Sample Ratio Mismatch): if the observed split deviates from the plan (e.g. 55/45 instead of 50/50), stop and check tagging, targeting, redirects or bots.

- Track and analyze without “peeking”.

During the test, check that each variant loads correctly and receives traffic. Don't stop as soon as a chart flashes “significant”: stick to the planned sample size and duration, or use a sequential method designed for repeated looks.

At the end, analyze the primary KPI first, then secondary impacts (AOV, speed, return rate, engagement) and stability per segment (device, source, market).

- Deploy the winner... and iterate.

Put the winning version into production, document the learning, then move on to the next hypothesis. Optimization is continuous (new visitors, loyal customers, new seasons).

Advanced bonus: variance reduction (CUPED).

When your data allows it, reuse pre-experiment signals (for example each user's behavior before the test started) to reduce variance, speed up tests and decrease the required sample size.
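A minimal sketch of the CUPED adjustment, assuming you have, for each user, the test-period metric `y` and a pre-experiment covariate `x` (for example the same metric in the weeks before the test) that the variation cannot have influenced. The data below is synthetic. In a real test, you would compare the mean of the adjusted values between A and B instead of the raw means.

```python
import random
from statistics import mean, variance


def cuped_adjust(y: list[float], x: list[float]) -> list[float]:
    """CUPED: y_adj = y - theta * (x - mean(x)), with theta = cov(x, y) / var(x).

    theta is estimated on all users pooled across variants. The adjusted
    values keep the same mean as y but have lower variance whenever x and y
    are correlated.
    """
    mx, my = mean(x), mean(y)
    cov_xy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / (len(x) - 1)
    theta = cov_xy / variance(x)
    return [yi - theta * (xi - mx) for xi, yi in zip(x, y)]


# Synthetic example: pre-test spend partly predicts in-test spend.
rng = random.Random(7)
pre = [rng.gauss(50, 15) for _ in range(2_000)]
during = [0.8 * p + rng.gauss(0, 10) for p in pre]
adjusted = cuped_adjust(during, pre)
print(f"raw variance: {variance(during):.1f}, CUPED variance: {variance(adjusted):.1f}")
```

Lower variance means the same effect reaches significance with fewer visitors; the gain depends on how strongly the pre-experiment signal correlates with the test metric.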

Practical tip: keep a checklist for each test (objective, hypothesis, design, sample size, duration, stopping rules, SRM, segments, deployment criteria) and a learning journal shared with the team.
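One way to keep that checklist enforceable is to store each test plan as a structured record. The `TestPlan` class and its field names are a hypothetical sketch mirroring the checklist items above, not a feature of any specific tool.

```python
from dataclasses import dataclass, field


@dataclass
class TestPlan:
    """One record per test, mirroring the checklist items."""
    objective: str
    hypothesis: str
    primary_kpi: str
    design: str                      # e.g. "simple A/B", "split URL", "multivariate"
    sample_size_per_variant: int
    min_duration_days: int
    stopping_rule: str               # e.g. "fixed horizon, no early stop"
    planned_split: tuple[float, ...] = (0.5, 0.5)
    segments: list[str] = field(default_factory=list)
    deployment_criterion: str = ""

    def __post_init__(self) -> None:
        # A split that does not sum to 1 would make later SRM checks meaningless.
        if abs(sum(self.planned_split) - 1.0) > 1e-9:
            raise ValueError("planned_split must sum to 1")

    def missing_items(self) -> list[str]:
        """Checklist items still empty (or zero) before launch."""
        return [name for name, value in vars(self).items() if not value]


plan = TestPlan(
    objective="+10% add-to-cart rate",
    hypothesis="A descriptive title (material + use) reduces uncertainty",
    primary_kpi="add_to_cart_rate",
    design="simple A/B",
    sample_size_per_variant=53_000,
    min_duration_days=14,
    stopping_rule="fixed horizon, no early stop",
)
print(plan.missing_items())  # ['segments', 'deployment_criterion']
```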

👉 Also read: How to optimize the conversion of Google Local Inventory Ads ads?

5. Best practices in A/B testing

To maximize the benefits of AB testing, it is crucial to follow certain practices that have been proven to be effective 🌟

These recommendations will help you to obtain reliable results and to draw relevant conclusions to optimize your site on an ongoing basis.

Test one variable at a time

In theory, to obtain clear and accurate results, you should test only one variable at a time (such as the title, image, or call-to-action button) to determine its exact impact on performance.

Testing multiple items simultaneously can make it difficult to identify what actually influenced the results.

However, good methodological intentions do not always deliver the most benefit or the fastest satisfactory results, so Dataïads technology applies a hybrid approach.

At Dataïads, based on our experience supporting more than 200 e-commerce brands, we prefer to first run strategy tests, which may themselves include multiple variables.

With this approach, performance gains become significant quickly and strategic marketing lessons are identified early.

Once a significant impact has been achieved, we refine variable by variable, guided by the analytical results obtained.

To better understand this method, we recommend reading this article.

Ensuring the statistical validity of the sample

Launching an A/B test means exposing part of the audience, or sometimes all of it, to a web experiment. It can also make sense to target a specific population for a given test.

However, keep in mind that a sample that is too small can produce non-significant and misleading results.

Use a sufficient sample size to ensure that the results are statistically significant and reliable. Sample size calculators can determine the minimum number of participants needed.
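As an illustration of what such calculators do, here is a sketch of the standard normal-approximation formula for a binary KPI (conversion or add-to-cart), assuming a 50/50 split and a two-sided test. The 5% significance level and 80% power are common conventions used as defaults here, not values prescribed by this article.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion z-test.

    Normal approximation:
        n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate B must reach to be detected
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    var_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * var_sum / (p2 - p1) ** 2)


n = sample_size_per_variant(0.03, 0.10)
print(n)
```

With a 3% baseline conversion rate and a +10% relative MDE (3.0% to 3.3%), this comes out at roughly 53,000 visitors per variant; halving the MDE roughly quadruples that figure.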

Have an adequate test period

An A/B test is generally run over a fixed period, sometimes a short one.

However, it is important to give your test enough time to capture natural variations in user behavior.

A test that is too short may not provide the complete picture, especially if your traffic fluctuates with factors like the day of the week or ongoing promotions.

Analyze data rigorously

Once the test is over, it is important to analyze the results carefully 👀

Take the time to decipher the results obtained and challenge them!

Use statistical methods to confirm the significance of the differences observed.

Don't rely solely on apparent variations and make sure the results are statistically valid before drawing conclusions.
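For a binary KPI such as conversion, a common choice is the two-proportion z-test. This sketch assumes a fixed-horizon test analyzed once, at the planned end; the counts in the example are made up. Revenue-type KPIs (AOV, revenue per visit) need a different test, such as a t-test or a bootstrap.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates (pooled standard error).

    Returns (absolute lift B - A, z statistic, p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical counts: 3.0% vs 3.3% conversion on 50,000 visitors each.
lift, z, p = two_proportion_z_test(1_500, 50_000, 1_650, 50_000)
print(f"lift: {lift:+.2%}, z = {z:.2f}, p = {p:.4f}")
```

A small p-value only says the difference is unlikely to be noise; still check secondary KPIs and per-segment stability before deploying.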

Documenting and iterating

Finally, A/B testing is a great way to build continuous improvement.

To do this, record the results of each test, draw conclusions, and implement improvements.

Repeat the process with new tests for continuous and gradual optimization.

6. What to test first on an e-commerce site

6.1 Product pages (PDP)

- Rich and structured titles: Brand + Model + Differentiating attributes (material, use, real color).

- Visual gallery: main angle vs lifestyle, zoom, 360°, short video.

- Key arguments: concrete benefits, social proof, guarantees, delivery times and costs.

- CTA and price block: label, position, stickiness, stock/promo mentions.

6.2 Listings/results

- Sort order (best sellers, new products, personalized relevance).

- Filters: presence, order, readable values (sizes, cuts, materials).

- Thumbnails: 1 vs 2 images, “promo” labels, review badges.

6.3 Media landing pages

- Promise → content alignment (same wording as the ad).

- Contextual merchandising: similar products, variants, cross-sell.

- Speed: perceived speed and Web Vitals.

6.4 Checkout

- Steps (one-page vs multi-step).

- Payment methods (BNPL, wallets), autofill, clarity of fees.

7. Common mistakes (and how to avoid them)

- Peeking/early stop: fix the analysis plan beforehand, or adopt a proper sequential method.

- Sample Ratio Mismatch (SRM): if the observed distribution does not match the plan (e.g. 55/45 instead of 50/50), investigate tracking, targeting, redirects and bots. Do not interpret the results until the cause is found.

- Undersized test: the expected effect is too small for the available traffic; use more sensitive estimators (CUPED) or revisit the minimum detectable effect.

- Multivariate without traffic: the number of combinations explodes. Favor a sequence of simple A/B tests.

- Wrong KPI: optimize conversion or value, not just clicks.

- Seasonality bias: let the test run long enough to cover full cycles (weekdays vs weekends, commercial periods).

- Obsolete tooling: plan your platform choices ahead and/or use the Experiments modules in ad platforms.
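Peeking is easy to demonstrate with simulated A/A tests, where both arms share the same true conversion rate, so every “significant” result is a false positive. The sketch below compares checking significance once at the end with checking it every 100 visitors per arm. The traffic, rate, number of looks and seed are arbitrary assumptions, so the exact rates will vary, but the peeking rate typically ends up well above the nominal 5%.

```python
import random
from math import sqrt
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 5% threshold


def z_stat(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z statistic (0 when there is no variance yet)."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    return 0.0 if se == 0 else (conv_b / n_b - conv_a / n_a) / se


def simulate_aa(n_sims=200, n_per_arm=2_000, look_every=100, rate=0.05, seed=1):
    """Return (false-positive rate with one final look, rate with repeated peeks)."""
    rng = random.Random(seed)
    fixed_fp = peeking_fp = 0
    for _ in range(n_sims):
        conv_a = conv_b = 0
        peeked_hit = False
        for i in range(1, n_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if i % look_every == 0 and abs(z_stat(conv_a, i, conv_b, i)) > Z_CRIT:
                peeked_hit = True  # a peeker would have stopped and "shipped" B here
        fixed_fp += abs(z_stat(conv_a, n_per_arm, conv_b, n_per_arm)) > Z_CRIT
        peeking_fp += peeked_hit
    return fixed_fp / n_sims, peeking_fp / n_sims


fixed, peek = simulate_aa()
print(f"fixed horizon: {fixed:.1%}, peeking every 100 visitors: {peek:.1%}")
```

Because the final look is one of the peeks, the peeking rate can never be lower than the fixed-horizon rate; every extra look only adds chances to stop on noise. Sequential methods adjust the threshold to account for those repeated looks.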

8. Linking A/B testing, product feeds and Shopping campaigns

- Before the click (pre-click): the quality of the product feed influences the eligibility and relevance of ads. Descriptive titles, complete attributes and rich visuals often result in a higher CTR and a lower CPC. Test title and image variants on the feed side (created in a few clicks with the multimodal AI in Smart Asset), then replicate the winners in your campaigns to create a virtuous circle.

- With Feed Enrich (Dataïads), you can industrialize these textual and visual variants from the feed, then A/B test their impact in PMax/Demand Gen via Google Ads Experiments.

- After the click (post-click): Dataïads Smart Landing Pages let you deploy fast, contextualized pages and iterate on variants (merchandising, argument blocks, discount filters) to measure the real effect on add-to-cart and conversion, without monopolizing internal IT.

- Unified measurement: define your KPIs, your attribution window and your analysis method before the test. Keep cross-channel consistency in mind (PMax spans several inventories).

9. Operational checklist (copyable)

- 1 primary KPI, clear hypothesis.

- Test design adapted to your traffic volume (simple A/B by default).

- Sample size and stopping rules fixed in advance.

- Randomization + SRM monitoring.

- Dashboard with segment/robustness views activated.

- Deploy the winner in 1 click.

- Learning journal shared with the team.

- Loop: pre-click (Feed Enrich) → creative (Smart Creative/Asset) → post-click (Smart Landing Pages), then insights are fed back into the feed.

10. Express FAQ

Can I A/B test my Google Ads campaigns?

Yes. Use Experiments for assets, targeting, bidding, Demand Gen or Search, while reading the post-click impact on the landing page side.

How can I reduce the length of a test?

Increase traffic, target a larger expected effect, or apply variance reduction (e.g. CUPED) when your data allows it.

Are A/B testing and personalization compatible?

Yes, if segments are defined in advance and tests are run per segment (rather than everything, everywhere, at the same time).

What is an SRM?

A Sample Ratio Mismatch: the share of traffic measured per variant does not match the plan. It is a methodological red flag.
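A common way to detect an SRM is a chi-square goodness-of-fit test comparing observed visitor counts with the planned split. A minimal sketch for two variants follows; the counts are illustrative, and the p < 0.001 alarm threshold is a widely used convention rather than a universal rule.

```python
from statistics import NormalDist


def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for a 2-variant split."""
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    # With 1 degree of freedom, chi2 is the square of a standard normal Z,
    # so P(chi2_1 > chi2) = P(|Z| > sqrt(chi2)).
    return 2 * (1 - NormalDist().cdf(chi2 ** 0.5))


for a, b in [(50_200, 49_800), (55_000, 45_000)]:
    p = srm_p_value(a, b)
    print(a, b, f"p = {p:.3g}", "SRM suspected" if p < 0.001 else "split looks fine")
```

The 55/45 split from the example above is flagged immediately at this volume, while a 50.2/49.8 split is consistent with random variation.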

* * * * *

In conclusion, A/B testing is a powerful tool for any company looking to optimize its e-commerce site.

By using this method, you can improve conversions, personalize the user experience, and optimize your marketing budget.

Customer acquisition runs across various channels, with tailored content and media budgets allocated by level of profitability. In this acquisition strategy, the contribution of marketing A/B testing to budget performance should not be underestimated.

To find out how the Dataïads platform can help you get the most out of A/B testing, feel free to contact us.

Our customers benefit from our Post Click Experience expertise to transform their online performance and achieve their business goals.

Continue reading

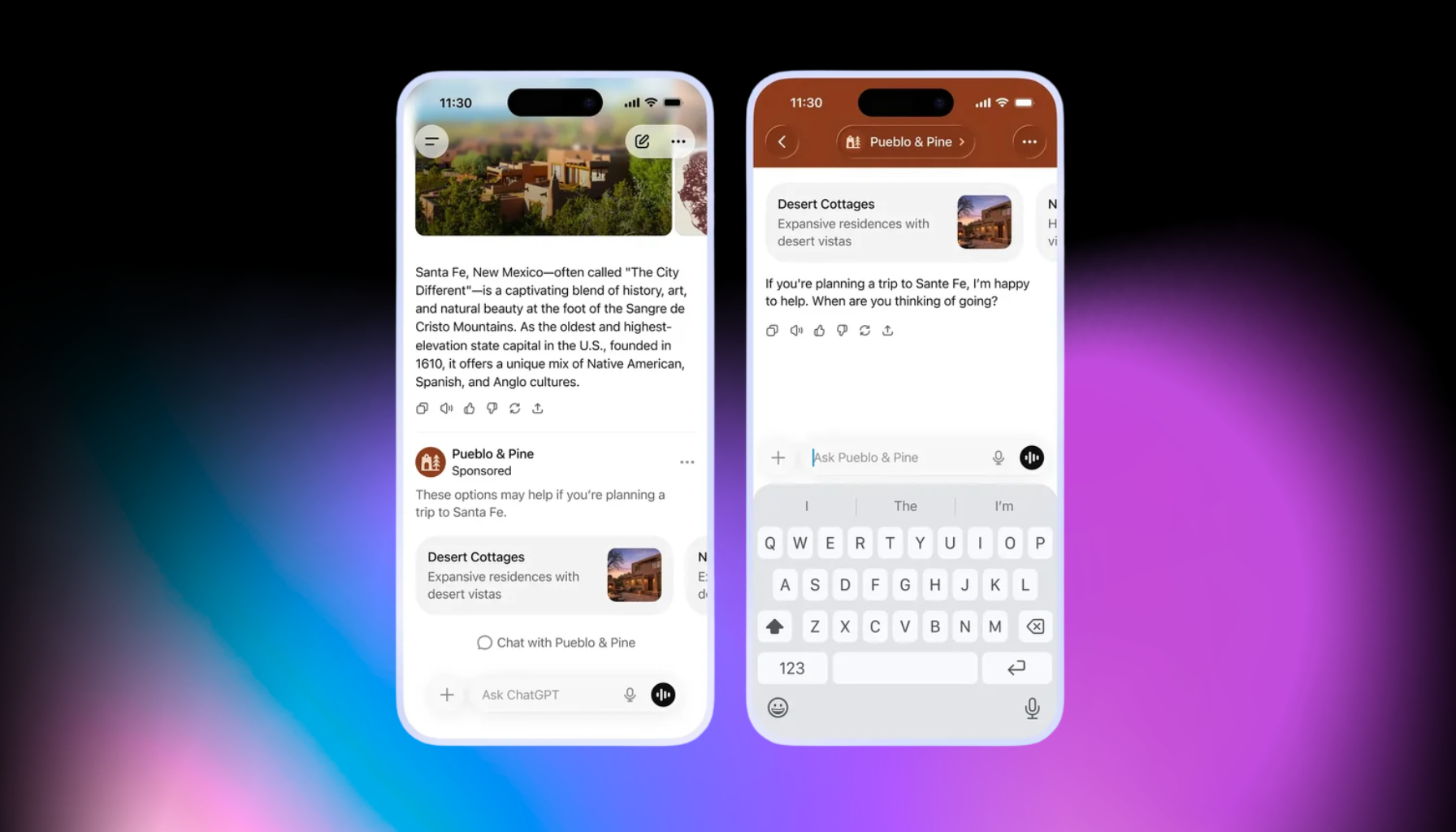

ChatGPT Ads: How Conversational Advertising Is Changing the Rules of Digital Marketing

Google UCP & OpenAI ACP: 2026 expert checklist to be ready for agentic commerce

Google's Universal Commerce Protocol (UCP): understanding the clickless commerce revolution and its challenges for e-retailers

.svg)